While configuring this source, you have to provide values for the following properties −

maxBatchSize − Maximum number of Twitter messages that should be in a Twitter batch.

maxBatchDurationMillis − Maximum number of milliseconds to wait before closing a batch.
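To make these properties concrete, here is a minimal sketch of a shell snippet that writes an agent configuration file for the Twitter source, a memory channel, and an HDFS sink. The agent name TwitterAgent, the component names Twitter, MemChannel, and HDFS, the file name twitter.conf, the CONF_DIR variable, and all numeric values are illustrative assumptions. The four credential values are placeholders to be replaced with those of your own Twitter application. The HDFS path is the twitter_data directory used in this chapter.

```shell
#!/bin/sh
# Sketch: write a Flume agent configuration for the Twitter source,
# a memory channel, and an HDFS sink.
# Assumptions: CONF_DIR, the file name, the agent/component names, and the
# numeric values are illustrative; replace the placeholder credentials.
CONF_DIR="${CONF_DIR:-./conf}"
CONF_FILE="$CONF_DIR/twitter.conf"
mkdir -p "$CONF_DIR"

cat > "$CONF_FILE" <<'EOF'
# Name the components of this agent
TwitterAgent.sources = Twitter
TwitterAgent.channels = MemChannel
TwitterAgent.sinks = HDFS

# Twitter 1% Firehose source (experimental)
TwitterAgent.sources.Twitter.type = org.apache.flume.source.twitter.TwitterSource
TwitterAgent.sources.Twitter.consumerKey = YOUR_CONSUMER_KEY
TwitterAgent.sources.Twitter.consumerSecret = YOUR_CONSUMER_SECRET
TwitterAgent.sources.Twitter.accessToken = YOUR_ACCESS_TOKEN
TwitterAgent.sources.Twitter.accessTokenSecret = YOUR_ACCESS_TOKEN_SECRET
TwitterAgent.sources.Twitter.maxBatchSize = 1000
TwitterAgent.sources.Twitter.maxBatchDurationMillis = 1000

# Memory channel that buffers events between source and sink
TwitterAgent.channels.MemChannel.type = memory
TwitterAgent.channels.MemChannel.capacity = 10000
TwitterAgent.channels.MemChannel.transactionCapacity = 1000

# HDFS sink that writes events into the twitter_data directory
TwitterAgent.sinks.HDFS.type = hdfs
TwitterAgent.sinks.HDFS.hdfs.path = hdfs://localhost:9000/user/Hadoop/twitter_data
TwitterAgent.sinks.HDFS.hdfs.fileType = DataStream

# Wire the source and the sink to the channel
TwitterAgent.sources.Twitter.channels = MemChannel
TwitterAgent.sinks.HDFS.channel = MemChannel
EOF

echo "wrote $CONF_FILE"
```

In this sketch the channel's transactionCapacity is set no lower than the source's maxBatchSize, so that a full batch of tweets can be committed to the channel in one transaction.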

The jar files corresponding to this source are located in the lib folder of Flume. Set the classpath variable to the lib folder of Flume in the flume-env.sh file:

export CLASSPATH=$CLASSPATH:/FLUME_HOME/lib/*

This source needs the Consumer key, Consumer secret, Access token, and Access token secret of a Twitter application.

We have to configure the source, the channel, and the sink using the configuration file in the conf folder. The example given in this chapter uses an experimental source provided by Apache Flume named Twitter 1% Firehose, together with a memory channel and an HDFS sink. The source connects to the 1% sample Twitter Firehose using the streaming API, continuously downloads tweets, converts them to Avro format, and sends Avro events to a downstream Flume sink. It is available by default with the installation of Flume.

Start Hadoop and create a folder in it to store Flume data. Complete the following steps before configuring Flume.

If Hadoop is already installed in your system, verify the installation using the hadoop version command. If your system contains Hadoop, and if you have set the path variable, the output includes lines such as the following −

From source with checksum 18e43357c8f927c0695f1e9522859d6a

This command was run using /home/Hadoop/hadoop/share/hadoop/common/hadoop-common-2.6.0.jar

Browse through the sbin directory of Hadoop and start yarn and Hadoop dfs (distributed file system). During startup, the console shows messages such as "localhost: starting nodemanager, logging to" followed by the path of the log file.

In Hadoop DFS, you can create directories using the command mkdir. Create a directory with the name twitter_data in the required path as shown below.

$ hdfs dfs -mkdir hdfs://localhost:9000/user/Hadoop/twitter_data
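The Hadoop preparation described here (verify the installation, start dfs and yarn, create the twitter_data directory) can be collected into one small script. The following is only a sketch under stated assumptions: the default HADOOP_HOME location is a guess to be adjusted to your installation, and DRY_RUN and the helper functions run and prepare_hdfs are hypothetical names introduced for illustration. With DRY_RUN=1, which is the default here, the commands are printed rather than executed.

```shell
#!/bin/sh
# Sketch: prepare HDFS for the Flume example (verify, start, create directory).
# Assumptions: HADOOP_HOME points at the Hadoop installation (the default
# below is a guess), and DRY_RUN is a switch of this script only.
DRY_RUN="${DRY_RUN:-1}"
HADOOP_HOME="${HADOOP_HOME:-/usr/local/hadoop}"
TARGET_DIR="hdfs://localhost:9000/user/Hadoop/twitter_data"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

prepare_hdfs() {
  run hadoop version                      # verify the installation
  run "$HADOOP_HOME/sbin/start-dfs.sh"    # start the distributed file system
  run "$HADOOP_HOME/sbin/start-yarn.sh"   # start yarn
  run hdfs dfs -mkdir -p "$TARGET_DIR"    # create the target directory
  run hdfs dfs -ls "$TARGET_DIR"          # confirm that it exists
}

prepare_hdfs
```

Running the script with DRY_RUN=0 executes the commands for real. The -p flag on mkdir is a choice made in this sketch so that the script can be rerun without failing when the directory already exists.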


Step 2

Click on the Create New App button. You will be redirected to a window with an application form in which you have to fill in your details in order to create the App. While filling in the website address, give the complete URL pattern.

Step 3

Fill in the details, accept the Developer Agreement, and then click on the Create your Twitter application button at the bottom of the page. If everything goes fine, an App will be created with the given details.

Step 4

Under the Keys and Access Tokens tab, at the bottom of the page, there is a button named Create my access token. Click on it to generate the access token.

Step 5

Finally, click on the Test OAuth button at the top right of the page. This leads to a page that displays your Consumer key, Consumer secret, Access token, and Access token secret. These values are needed to configure the agent in Flume.

Since we are storing the data in HDFS, we also need to install or verify Hadoop.

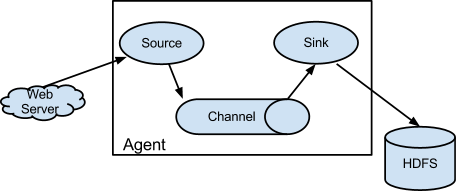

In Flume, a channel buffers the collected data for a sink, which finally pushes it to centralized stores. In the example provided in this chapter, we will create an application and get the tweets from it using the experimental Twitter source provided by Apache Flume. We will use the memory channel to buffer these tweets and the HDFS sink to push them into HDFS.

To fetch Twitter data, we have to create a Twitter application, install and start HDFS, and configure Flume.

In order to get tweets from Twitter, you need to create a Twitter application, which takes a few steps.

Step 1

Open the Twitter Application Management window, where you can create, delete, and manage Twitter Apps.


Using Flume, we can fetch data from various services and transport it to centralized stores (HDFS and HBase). This chapter explains how to fetch data from the Twitter service and store it in HDFS using Apache Flume.

As discussed in Flume Architecture, a webserver generates log data and this data is collected by an agent in Flume.
